The correct way to load custom forms JS is to put it in a separate .js file, loaded after and outside* the form embed code:

<!-- standard form embed -->

<script src="//954-TTG-937.mktoweb.com/js/forms2/js/forms2.min.js"></script>

<form id="mktoForm_846"></form>

<script>MktoForms2.loadForm("//954-TTG-937.mktoweb.com", "954-TTG-937", 846);</script>

<!-- /standard form embed -->

<!-- custom form behaviors JS and CSS -->

<script src="https://www.example.com/custom-form-behaviors.js"></script>

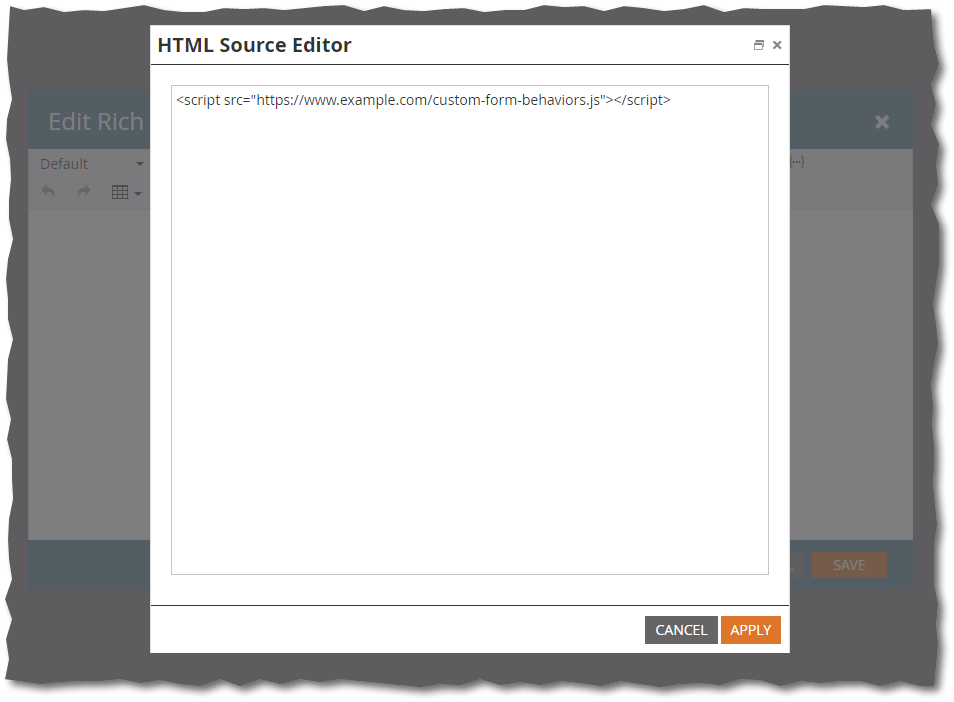

<!-- /custom form behaviors JS and CSS -->

Sometimes, users upload a .js file as recommended, but they try to put the <script> tag in a Rich Text box, thinking this will help the code travel wherever the embed goes:

It’s an understandable move, but has 2 major flaws:

- The code will always run multiple times because of the way the form DOM is built. I’ve written about this factor before. If you’re the dev, you can avoid it (i.e. set a flag so the meat of the code only runs once, otherwise exiting early) but if you forget, you can end up with confusing and/or catastrophic bugs.

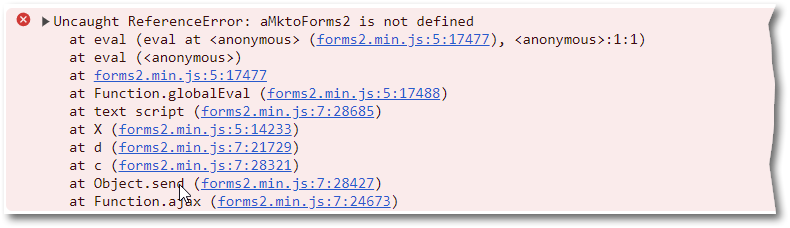

- If the JS file has an error and is on the same origin as the page, the form will fail to render at all. This one I’m exploring for the first time today, after it came up in a recent Nation thread.
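For the first flaw, the "set a flag" guard can be sketched roughly as below. This is only an illustration: the flag name `__customFormBehaviorsLoaded` and the helper `runOnce` are hypothetical, not anything the forms library provides.

```javascript
// Hypothetical flag name; pick something unlikely to collide with other globals.
const FLAG = "__customFormBehaviorsLoaded";

// Runs `behaviors` the first time it is called for a given scope object,
// and exits early on every later call.
function runOnce(scope, behaviors) {
  if (scope[FLAG]) {
    return false; // already ran once: skip the meat of the code
  }
  scope[FLAG] = true;
  behaviors();
  return true;
}

// In the .js file, the whole body would be wrapped like this:
runOnce(globalThis, function () {
  // the meat of the custom form behaviors goes here
});
```

Even if the file is executed several times, only the first execution gets past the flag check.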

I could just reiterate “Keep custom code out of the form!” and leave it at that, but there’s something fascinating about this particular bug that seems worth detailing for the learners out there.

How form descriptor (i.e. Form Editor) <script> tags are processed

HTML in a Form Rich Text — including <div> or <span> or <script> or any other tags — isn’t output directly into the HTML document the way it would be in a Landing Page or Email Rich Text block.

It can’t be, because the form descriptor is served as a JS object, so any HTML inside it starts out as a JS string.

The forms lib initially “hydrates” your custom HTML inside a Document Fragment representing the <form>, alongside the automatically created <div>, <input>, and <label> tags. After the <form> is rendered offline, its content is inserted into the main online document. (Offline rendering takes just a few milliseconds, and it’s smart to avoid a ton of browser reflows.)

So far so good. But <script> tags are special. You can’t run a <script> with innerHTML, e.g. this won’t work:

document.head.innerHTML += "<script>console.log('hi');</script>";

All you’re doing there is inserting (yes, this is confusing!) a <script> element. But you’re not running the code inside it.

It’s a fascinating, age-old browser quirk. You need to treat script-injected scripts specially in order for code to run. There are a few options:

1. If you can access the JS code itself, you can either:

   a. create a new element using document.createElement("script") and set the code as its text property

   b. or eval() the code

2. If you only have the URL of the .js file and retrieving the code is blocked by CORS, you can only:

   a. create a new element using document.createElement("script") and set the URL as its src property

These all have largely the same outcome, except for the fact that 1a and 2a are asynchronous, while 1b blocks waiting for the eval() to finish successfully.**
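Option 2a, the src-based injection, might look like the sketch below. The helper name `injectExternalScript` is hypothetical, and the document is passed in as a parameter only so the function is not tied to a global; in a browser you would pass `document`.

```javascript
// Injects a <script> that points at a URL. The calling code never reads
// the file's content; fetching and running it is left to the browser.
function injectExternalScript(doc, url) {
  const el = doc.createElement("script");
  el.src = url;
  doc.head.append(el);
  return el;
}
```

In a page this would be called as `injectExternalScript(document, "https://www.example.com/custom-form-behaviors.js")`.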

When you inject a new script using text or src, even if the script code has an error, the outer code keeps running. For example, this deliberately bad injected “code” will throw a fatal error in the console, but the console.log() and anything after it will still run:

let myScript = document.createElement("script");

myScript.text = "this ain’t valid JS";

document.head.append(myScript);

console.log("script has been injected, hope it ran");

In stark contrast, if eval() throws an immediate error***, then the outer script stops completely. Here, you’ll never see the console.log():

let myScript = "this ain’t valid JS";

eval(myScript);

console.log("script has been eval’d, hope it ran");

Cross-origin and same-origin .js files are loaded differently

Here’s where the weird stuff happens.

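If your .js file is hosted on the same origin as the main document (remember, origins like https://www.example.com and https://pages.example.com are different, so same-origin is far stricter than same-domain), then its content is guaranteed to be readable, just like fetch()-ing a same-origin .json or .csv file. Because the code itself is readable, it can be run with eval(), and an eval() error stops the outer code. That is why a same-origin file with an error keeps the form from rendering at all.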
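Since same-origin is easy to confuse with same-domain, here is a quick way to check two URLs using the standard `URL` API. The helper name `isSameOrigin` and the example paths are made up for illustration.

```javascript
// Two URLs share an origin only if scheme, host, and port all match.
function isSameOrigin(urlA, urlB) {
  return new URL(urlA).origin === new URL(urlB).origin;
}

// Same registrable domain, different hosts: not the same origin.
const crossOrigin = isSameOrigin(
  "https://www.example.com/custom-form-behaviors.js",
  "https://pages.example.com/landing-page.html"
);

// Identical scheme and host: same origin.
const sameOrigin = isSameOrigin(
  "https://www.example.com/custom-form-behaviors.js",
  "https://www.example.com/contact.html"
);
```

In a browser, the second argument would typically be the page's own `location.href`.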

The exact same file loaded cross-origin wouldn’t block the form, while throwing a similar console error.
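The blocking described in this section comes down to how a thrown error propagates out of eval(). The sketch below runs in any JS engine, no DOM needed. `runCustomCodeThenRender` is a hypothetical stand-in for "outer code that still has work to do after running custom JS"; it is not the forms library's actual code, and the `guard` option exists only to show the contrast.

```javascript
// Runs `code` with eval(), then records a pretend "form rendered" step.
function runCustomCodeThenRender(code, guard) {
  const log = [];
  if (guard) {
    try {
      eval(code);
    } catch (err) {
      // The error is contained, so the steps after it still happen.
      log.push("custom code failed: " + err.name);
    }
  } else {
    eval(code); // any error thrown here propagates to the caller
  }
  log.push("form rendered");
  return log;
}
```

Called with `guard` set to `false` and broken code, the function throws before the "form rendered" step is ever reached. With `guard` set to `true`, the failure is logged and the remaining steps still run.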

Making all .js sources behave like cross-origin

You can turn off eval for same-origin scripts using this ▒▒▒▒ option: ▒▒▒▒.▒▒▒▒▒▒▒ = ▒▒▒▒▒.

(Sorry, decided not to provide that workaround. The solution is to follow the general recommendation: all <script> tags go outside the form! 😛)

Afterthought: What’s the big deal anyway — isn’t it better to error out hard?

You might argue that breaking the form when JS bugs out is a stronger signal than a console error. True!

But custom JS behaviors may be merely cosmetic, like adding Bootstrap CSS classes. Or they may be important, like populating hidden UTM fields, yet not so critical that the form should be down while you fix the code.

You should strive for zero console errors as part of your QA process. So take any uncaught error seriously and you’ll catch these, too.

Notes

* On Marketo LPs, simply place custom <script> tags before the closing </body> tag. This is easier than finding exactly where the form embed(s) appears in the final document.

** The default scope is different, but that’s for another day.

*** You can run eval itself asynchronously using setTimeout, in which case the error won’t block, but that’s a different case.